Descriptive statistics play a central role in any thorough data analysis: they summarize what a dataset contains and reveal its trends. By condensing data into a few numbers and charts and highlighting patterns that can later inform predictions, descriptive statistics help researchers and analysts make sense of large amounts of information. In this blog post, we will explore the basics of descriptive statistics, including their main types, common tools and techniques, real-world examples, and common pitfalls. By the end, you will have a solid understanding of how to use descriptive statistics for effective post-event analysis.

Introduction to Descriptive Statistics

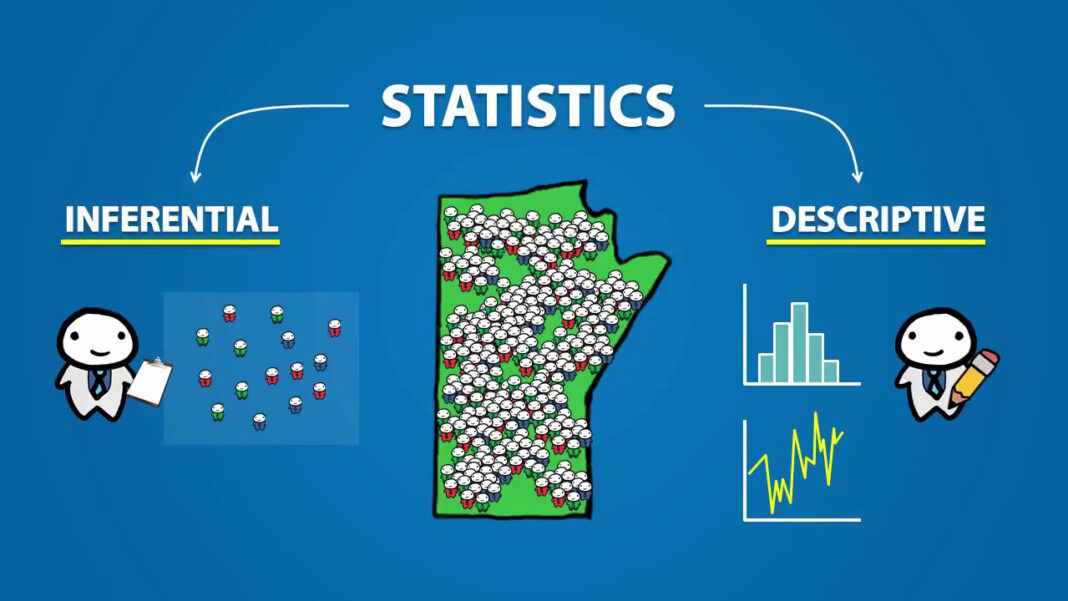

Descriptive statistics is a branch of statistics that deals with summarizing, organizing, and presenting data in a meaningful way. It is a core part of data analysis and supports decision-making across many industries. In simple terms, descriptive statistics condenses collected data into numerical measures and graphical representations. These help researchers and analysts identify patterns and trends within a dataset, making it easier to draw conclusions about the data at hand and to lay the groundwork for further analysis, such as prediction.

Two of the main types of descriptive statistics are measures of central tendency and measures of variability. Measures of central tendency describe the typical or central value in a dataset, while measures of variability describe how spread out the data points are. Together, they give a concise picture of a dataset and its underlying characteristics.

Types of Descriptive Statistics

Measures of Central Tendency

Measures of central tendency are numerical measures that represent the center of a dataset. They describe its average or typical value and are a starting point for understanding how the data points are distributed. The three most commonly used measures of central tendency are the mean, median, and mode.

Mean

The mean, also known as the arithmetic average, is the sum of all the values in a dataset divided by the number of values. It is the most commonly used measure of central tendency, but it is sensitive to extreme values, also known as outliers. The formula for calculating the mean is:

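$$\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i$$

where $x_1, \dots, x_n$ are the values in the dataset and $n$ is the number of values.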
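To make this concrete, here is a minimal Python sketch that computes the mean directly from its definition (sum divided by count) and checks it against Python's built-in `statistics` module, which also provides the median and mode. The data values are hypothetical, chosen only for illustration.

```python
import statistics

# Hypothetical sample data, chosen only for illustration.
values = [2, 3, 3, 5, 7, 10]

# Mean: the sum of the values divided by how many values there are.
mean = sum(values) / len(values)

# The standard library agrees on the mean and also gives the median and mode.
assert mean == statistics.mean(values)
median = statistics.median(values)  # even count, so the average of the two middle values
mode = statistics.mode(values)      # the most frequent value

print(mean, median, mode)  # prints: 5.0 4.0 3
```

Note that the median here (4.0) is not one of the data values: with an even number of values, it is the average of the two middle ones.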

Collecting and Organizing Data

Before applying descriptive statistics techniques, it is essential to gather and organize data in a meaningful way. The first step in this process is to determine the type of data being collected. There are two main types of data: quantitative and qualitative.

Quantitative Data

Quantitative data refers to numerical data that can be counted or measured. It includes variables such as age, height, income, and temperature. Quantitative data can further be classified as discrete or continuous.

Discrete data takes distinct, countable values, such as the number of siblings someone has. Continuous data, on the other hand, can take any value within a range, such as a person's height.

To collect quantitative data, researchers use various techniques such as surveys, questionnaires, and experiments. Once collected, this data is typically organized into a frequency distribution table or graph, making it easier to identify patterns and trends.

Qualitative Data

Qualitative data, also known as categorical data, describes qualities or categories rather than numerical quantities. It includes variables such as gender, occupation, and marital status. Qualitative data can be further classified as nominal or ordinal.

Nominal data consists of categories with no inherent order, such as eye color or nationality. Ordinal data, on the other hand, has a natural order, but the differences between categories are not necessarily equal; education level (high school, bachelor’s degree, master’s degree) is an example.

To collect qualitative data, researchers often use techniques such as interviews, focus groups, and observation. Once collected, this data can be organized into frequency tables or bar charts for analysis.
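A frequency table for categorical data simply counts how often each category appears. The Python sketch below does this with `collections.Counter` and adds each category's relative frequency (its share of all responses). The eye-color responses are hypothetical.

```python
from collections import Counter

# Hypothetical responses for a nominal variable (eye color), for illustration only.
responses = ["brown", "blue", "brown", "green", "brown", "blue"]

counts = Counter(responses)
total = len(responses)

# Frequency table: category, count, and relative frequency.
print(f"{'Category':<10}{'Count':>6}{'Share':>8}")
for category, count in counts.most_common():  # most frequent category first
    print(f"{category:<10}{count:>6}{count / total:>8.1%}")
```

Sorting by frequency suits nominal data. For ordinal data, it usually makes more sense to list the categories in their natural order (for example, high school before bachelor’s degree) instead of by count.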

Descriptive Statistics Tools and Techniques

Descriptive statistics relies on various tools and techniques to analyze and interpret data. Common tools include the measures of central tendency and variability introduced earlier, along with graphs and charts.

Graphs and Charts

Graphs and charts are an essential part of descriptive statistics. A visual display makes patterns and trends easier to spot than a table of numbers. Commonly used types include histograms, bar charts, and scatter plots.

Histograms

Histograms display continuous data by showing how many values fall within specific ranges, known as bins. The x-axis shows the ranges of values, and the y-axis shows the frequency, or count, of values in each bin. Histograms are particularly useful for seeing the shape of a distribution and spotting outliers.
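The counting behind a histogram can be sketched in a few lines of plain Python. This version uses equal-width bins (one choice among several; plotting libraries offer other binning rules) and prints a simple text histogram instead of drawing a chart. The height values and the `histogram_counts` helper are hypothetical, written for this example.

```python
# Hypothetical continuous measurements (heights in cm), for illustration only.
heights = [152.0, 158.5, 160.2, 163.8, 165.0, 167.4, 171.9, 174.3, 180.6, 188.1]


def histogram_counts(data, num_bins):
    """Count values in num_bins equal-width bins.

    Assumes data contains at least two distinct values.
    Returns the bin edges and the count of values in each bin.
    """
    low, high = min(data), max(data)
    width = (high - low) / num_bins
    counts = [0] * num_bins
    for x in data:
        # Each bin covers [start, end); the maximum value goes in the last bin.
        index = min(int((x - low) / width), num_bins - 1)
        counts[index] += 1
    edges = [low + i * width for i in range(num_bins + 1)]
    return edges, counts


edges, counts = histogram_counts(heights, num_bins=4)
for i, count in enumerate(counts):
    # One '#' per value in the bin, as a simple text histogram.
    print(f"{edges[i]:6.1f} to {edges[i + 1]:6.1f} | {'#' * count}")
```

The number of bins matters: too few can hide the shape of the distribution, while too many can make random noise look like a pattern.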

Bar Charts

Bar charts look similar to histograms but display the frequency of categorical data. Each category gets its own separate bar, with gaps between bars, which makes bar charts well suited to qualitative data. The height of each bar represents the frequency, or count, of that category.

Scatter Plots

Scatter plots are used to visualize the relationship between two continuous variables. Each data point is represented by a dot on the graph, with the x-axis showing the value of one variable and the y-axis representing the value of the other variable. Scatter plots are particularly useful in identifying correlations between variables.

Measures of Association

Measures of association quantify the relationship between two variables; correlation coefficients are the most familiar example. The most common is Pearson’s correlation coefficient, which measures the strength and direction of the linear relationship between two continuous variables. It ranges from -1 (perfect negative correlation) to 1 (perfect positive correlation), with 0 indicating no linear relationship. Variables with a correlation near 0 can still be related in a nonlinear way.
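Pearson’s coefficient can be computed directly from its definition: the sum of the products of each pair's deviations from their means, divided by the square root of the product of the two sums of squared deviations. Below is a minimal Python sketch of that calculation; the paired data and the `pearson_r` helper are hypothetical. Python 3.10 and later also provide `statistics.correlation`, which computes Pearson’s coefficient by default.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient for two equal-length lists of numbers.

    Raises ZeroDivisionError if either list has no variation.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of paired deviations (proportional to the covariance).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Square roots of the sums of squared deviations (proportional to each standard deviation).
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical paired observations, for illustration only.
hours_studied = [1, 2, 3, 4, 5]
exam_score = [52, 55, 61, 64, 70]
print(round(pearson_r(hours_studied, exam_score), 3))  # prints: 0.993
```

A value this close to 1 indicates a strong positive linear relationship in this small sample. It says nothing on its own about whether one variable causes the other.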

Interpreting Central Tendency Measures

Once data has been collected, organized, and summarized, the next step is to interpret the results. Measures of central tendency provide valuable insights into the typical or average value of a dataset and help identify the center or midpoint of the data.

One key point to note when interpreting measures of central tendency is that some of them, particularly the mean, can be strongly affected by extreme values, known as outliers. Outliers are data points that lie far above or below most of the data, and they can pull the mean away from the typical value; the median is much less sensitive to them. As such, it is essential to understand the distribution of the data before drawing conclusions from central tendency measures.
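A quick way to see this effect is to add a single extreme value to a small dataset and compare the mean and the median before and after. The incomes below are hypothetical.

```python
import statistics

# Hypothetical household incomes (in thousands), for illustration only.
incomes = [42, 45, 47, 50, 52, 55, 58]
with_outlier = incomes + [900]  # the same data plus one extreme value

for label, data in [("without outlier", incomes), ("with outlier", with_outlier)]:
    mean = statistics.mean(data)
    median = statistics.median(data)
    print(f"{label}: mean={mean:.1f}, median={median:.1f}")
```

Adding one extreme value moves the mean from about 49.9 to about 156.1, while the median only moves from 50 to 51. When a dataset is skewed or contains outliers, reporting the median alongside the mean gives a more honest picture of the typical value.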

Analyzing Variability and Spread

In addition to measures of central tendency, measures of variability describe how spread out the data points are. They show how closely values cluster around the center, such as the mean, and how widely they range across the dataset.
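The Python sketch below computes several common measures of variability with the standard library's `statistics` module: the range, the population variance and standard deviation, the sample standard deviation, and the interquartile range (IQR). The data values are hypothetical. Which variance to report is a judgment call: `pvariance` and `pstdev` divide by n and treat the data as the whole population, while `variance` and `stdev` divide by n - 1 and treat it as a sample.

```python
import statistics

# Hypothetical measurements, chosen only for illustration.
data = [2, 4, 4, 4, 5, 5, 7, 9]

data_range = max(data) - min(data)            # distance between the extremes
pop_variance = statistics.pvariance(data)     # mean squared deviation from the mean (divides by n)
pop_sd = statistics.pstdev(data)              # square root of the population variance
sample_sd = statistics.stdev(data)            # divides by n - 1; use when the data is a sample
q1, _, q3 = statistics.quantiles(data, n=4)   # quartiles, using the default 'exclusive' method
iqr = q3 - q1                                 # spread of the middle 50% of the values

print(f"range={data_range}, variance={pop_variance}, sd={pop_sd}, "
      f"sample sd={sample_sd:.3f}, IQR={iqr}")
```

Here the range is 7, the population variance is 4, and the population standard deviation is 2. The IQR (2.5 with this quartile method) describes only the middle half of the data, so it is less affected by extreme values than the range. Other software may use a different quartile method and report a slightly different IQR for the same data.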

Analyzing variability and spread is crucial for understanding the characteristics of a dataset. It can help identify outliers, reveal patterns, and inform predictions. Like measures of central tendency, some measures of variability, such as the range and the standard deviation, are sensitive to extreme values, while others, such as the interquartile range, are more robust. It is therefore essential to consider the distribution of the data when interpreting the results.

Real-World Examples and Case Studies

To better understand the role of descriptive statistics in post-event analysis, let’s look at some real-world examples and case studies.

Example 1: Customer Satisfaction Survey Analysis

A company conducted a customer satisfaction survey to gather feedback from its customers. The results of the survey were then analyzed using descriptive statistics techniques to identify areas for improvement.

The first step was to organize the data into a frequency distribution table and calculate measures of central tendency. The mean score for overall satisfaction was 3.8 out of 5, suggesting that customers were generally satisfied with the company’s services. However, mean scores varied considerably across specific categories, pointing to areas for improvement.

Next, measures of variability were calculated to understand the spread of the data. The standard deviation for overall satisfaction was 0.9, indicating relatively modest variation in scores. For specific categories, however, the standard deviation was higher, showing that customer opinions were more divided.

By using descriptive statistics, the company was able to identify which areas needed improvement and make data-driven decisions to enhance its services.
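A per-category summary like the one in this example takes only a few lines of Python. This sketch uses made-up ratings on a 1 to 5 scale (they are not the survey data from the example). For each category, it reports the mean, the sample standard deviation, and the count of each score, which together form a simple frequency distribution table.

```python
import statistics

# Hypothetical 1-5 ratings per survey category; not the company's actual data.
ratings = {
    "overall": [4, 4, 3, 5, 4, 3, 4, 5, 3, 4],
    "delivery": [5, 2, 4, 1, 5, 3, 2, 5, 4, 1],
    "support": [3, 4, 3, 3, 4, 3, 4, 3, 3, 4],
}

# Summarize each category with its mean, standard deviation, and score counts.
for category, scores in ratings.items():
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)  # sample standard deviation
    counts = {score: scores.count(score) for score in range(1, 6)}
    print(f"{category:<9} mean={mean:.2f} sd={sd:.2f} counts={counts}")
```

In this made-up data, "delivery" has a middling mean but by far the largest standard deviation, because customers are split between very high and very low scores. Looking at the spread as well as the mean is what reveals that kind of disagreement.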

Case Study: Predicting Sales using Descriptive Statistics

A retail store wanted to predict sales for the upcoming holiday season. They collected sales data from the past three years and used descriptive statistics to analyze trends and make predictions.

First, they created a frequency distribution table to get an overview of sales over the previous three years. They also calculated measures of central tendency and found that mean sales over that period were $100,000. Next, they compared the sales figures year by year and found that sales had increased each year, indicating a positive trend.
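The mean alone cannot reveal a trend, but comparing each season with the one before it can. This Python sketch uses three hypothetical yearly totals chosen so that their mean is $100,000; they are not the store's actual figures.

```python
# Hypothetical holiday-season sales, oldest season first; not the store's actual figures.
sales = [90_000, 100_000, 110_000]

mean_sales = sum(sales) / len(sales)

# Year-over-year percentage change between consecutive seasons.
changes = [(curr - prev) / prev * 100 for prev, curr in zip(sales, sales[1:])]

print(f"mean: ${mean_sales:,.0f}")
for year, change in enumerate(changes, start=2):
    print(f"year {year - 1} to year {year}: {change:+.1f}%")
```

Three flat years of $100,000 each would produce exactly the same mean. Only the year-over-year changes (about +11.1% and +10.0% here) show that sales are rising.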

To inform their forecast for the upcoming holiday season, they used measures of association and found a strong positive correlation between sales and the number of promotions run during the holiday season. Based on this, the company decided to run more promotions this year, and sales rose 15% compared with the previous year, although correlation alone cannot show that the promotions caused the increase.

Common Pitfalls and Challenges

While descriptive statistics is a powerful tool for post-event analysis, there are some common pitfalls and challenges to be aware of.

Misinterpretation of Results

One of the most significant challenges in descriptive statistics is misinterpreting results. As mentioned earlier, measures of central tendency and variability can be affected by extreme values, making it crucial to understand the distribution of data. Without considering this, analysts may draw incorrect conclusions and make decisions based on flawed assumptions.

Limited Sample Size

Another challenge when using descriptive statistics is working with a limited sample size. A small sample may not accurately represent the entire population, which can lead to biased or unstable conclusions. A sufficiently large, representative sample makes the results more reliable.
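A small simulation illustrates why sample size matters. The sketch below builds a hypothetical population (10,000 values drawn from a normal distribution with mean 50 and standard deviation 10, an assumption made only for illustration). It then draws many random samples of different sizes and measures how much their means vary from sample to sample. The `spread_of_sample_means` helper is hypothetical, written for this example.

```python
import random
import statistics

random.seed(42)  # fixed seed so the simulation is reproducible

# Hypothetical population: normal with mean 50 and standard deviation 10 (illustrative assumption).
population = [random.gauss(50, 10) for _ in range(10_000)]


def spread_of_sample_means(sample_size, trials=1_000):
    """Standard deviation of the means of many random samples of one size."""
    means = []
    for _ in range(trials):
        sample = random.sample(population, sample_size)  # drawn without replacement
        means.append(sum(sample) / sample_size)
    return statistics.stdev(means)


spreads = {n: spread_of_sample_means(n) for n in (5, 50, 500)}
for n, spread in spreads.items():
    print(f"sample size {n:>3}: sample means vary by about {spread:.2f}")
```

Means from samples of 5 swing far more than means from samples of 500. The spread shrinks roughly in proportion to 1/√n, so a summary computed from a handful of observations can easily land far from the population value.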

Lack of Appropriate Data

Lastly, descriptive statistics relies heavily on the availability of appropriate data. If the data collected is not representative of the population or does not include all relevant variables, the results may not provide meaningful insights. Therefore, it is crucial to carefully design data collection methods and ensure that all necessary data is included.

Conclusion and Best Practices

Descriptive statistics play a crucial role in post-event analysis and provide valuable insights into trends, patterns, and correlations within a dataset. By collecting and organizing data, utilizing tools and techniques, and interpreting results effectively, researchers and analysts can make data-driven decisions and draw accurate conclusions.

When conducting post-event analysis, it is important to keep in mind the type of data being collected, the distribution of the data, and potential outliers. By avoiding these common pitfalls, data analysts can use descriptive statistics to support sound decisions.

In conclusion, mastering descriptive statistics is an essential skill for any researcher or data analyst, and by understanding its principles and best practices, one can unlock valuable insights from vast amounts of data.